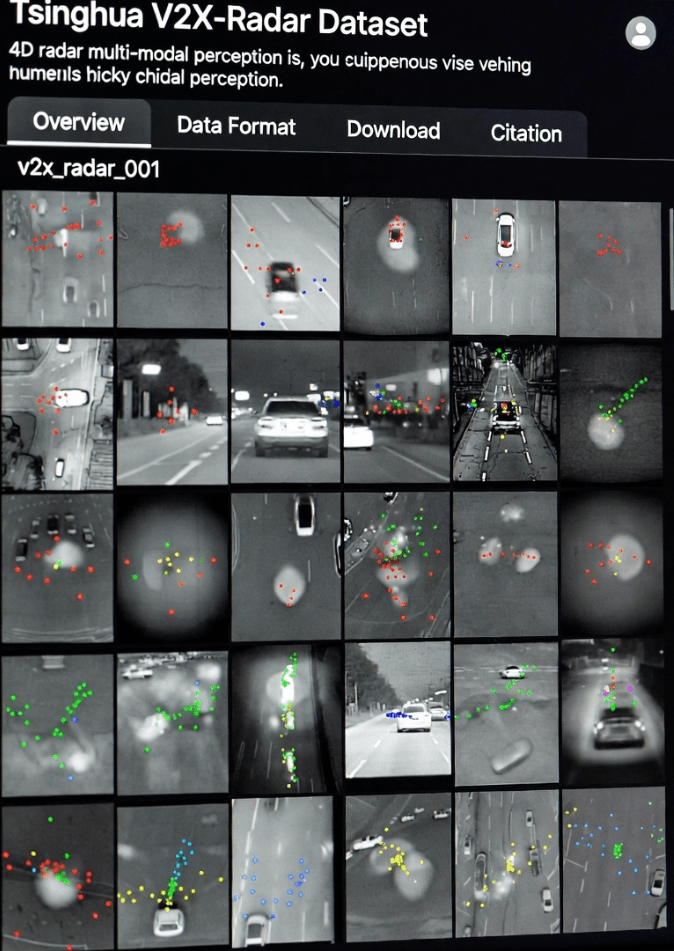

Autonomous driving is progressing rapidly, but environmental perception remains a critical challenge. Single-vehicle solutions are often limited by sensor blind spots and detection range, making it difficult to handle dense traffic, unusual scenarios, or extreme weather conditions. To overcome these limitations, the Tsinghua University Vehicle and Mobility Institute, together with several collaborators, released the V2X-Radar dataset at NeurIPS 2025. It is the first large-scale dataset using 4D radar for vehicle-to-everything (V2X) perception, enabling multi-modal sensor fusion and demonstrating significant advantages in complex environments (Tsinghua V2X-Radar official page).

The dataset represents a shift from traditional single-vehicle perception toward collaborative perception. While most autonomous vehicles rely on sensors such as LiDAR, cameras, and millimeter-wave radar, each sensor type has inherent weaknesses. LiDAR provides precise 3D structure, but its performance deteriorates in rain, fog, or snow. Cameras capture rich semantic information but depend on lighting and are often less effective at night or under strong backlight. Integrating data from vehicle-mounted sensors with roadside infrastructure allows a more complete and dynamic understanding of the environment, enabling vehicles to perceive areas they could not detect independently (IEEE Transactions on Intelligent Transportation Systems).

Existing V2X datasets, such as OPV2V and DAIR-V2X, generally lack 4D radar data, which limits research into multi-modal fusion. The V2X-Radar dataset addresses this gap by incorporating 4D radar alongside LiDAR and multi-view cameras, allowing researchers to explore deep spatio-temporal fusion for collaborative perception. This approach improves detection in long-tail scenarios, such as partially occluded pedestrians or vehicles at complex intersections.

Key Features of V2X-Radar

Multi-Modal Sensor Fusion

Vehicle and roadside units in V2X-Radar include LiDAR, 4D radar, and multi-view cameras, forming complementary perception capabilities. Each sensor contributes unique strengths:

- LiDAR produces dense point clouds, capturing object geometry with centimeter-level precision.
- 4D Radar operates at 77/79 GHz, penetrates rain, fog, and snow, and provides velocity information from sparse point clouds (Linpowave 4D Radar Technology).
- Cameras deliver high-resolution RGB images, adding semantic context and enabling recognition of traffic signs, lane markings, and pedestrian gestures.

Experiments demonstrate that LiDAR slightly outperforms radar in clear weather, but 4D radar maintains better stability in adverse conditions, with 1–2% higher mAP. These results confirm the value of multi-modal sensor fusion in real-world autonomous driving.
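Because the comparisons in this section are reported as mAP, a minimal sketch of how average precision is commonly computed may help when reading them. It assumes each detection has already been matched to ground truth (for example by a 3D IoU threshold) and uses all-point interpolation; the exact matching thresholds and interpolation scheme of the V2X-Radar benchmark are not given here and may differ.

```python
from typing import Dict, List, Tuple


def average_precision(detections: List[Tuple[float, bool]], num_gt: int) -> float:
    """All-point interpolated average precision for one class.

    detections: (confidence, is_true_positive) pairs. Matching each detection
    to a ground-truth box (e.g. by 3D IoU above a threshold) is assumed to be
    done already.
    num_gt: number of ground-truth boxes of this class.
    """
    if num_gt == 0:
        return 0.0
    # Rank detections from most to least confident.
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    tp = fp = 0
    precisions: List[float] = []
    recalls: List[float] = []
    for _, is_tp in ranked:
        if is_tp:
            tp += 1
        else:
            fp += 1
        precisions.append(tp / (tp + fp))
        recalls.append(tp / num_gt)
    # Replace each precision with the best precision at equal or higher recall.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Area under the interpolated precision-recall curve.
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def mean_average_precision(ap_per_class: Dict[str, float]) -> float:
    """mAP as the unweighted mean of per-class AP values."""
    return sum(ap_per_class.values()) / len(ap_per_class)
```

For three ranked detections (true positive, false positive, true positive) against two ground-truth boxes, this gives 0.5 × 1.0 + 0.5 × 2/3 ≈ 0.833.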

Diverse Scenario Coverage

Data was collected over nine months in multiple environments, including university campuses, public roads, and controlled test sites. The dataset covers:

- Weather conditions: clear, rainy, foggy, snowy
- Times of day: daytime, dusk, nighttime
- Complex intersections: cross streets, T-junctions, and occluded campus areas

Roadside cameras capture pedestrians or cyclists hidden from vehicle-mounted LiDAR, providing valuable data for long-tail scenario training (MIT CAV Dataset). These scenarios are crucial for developing robust autonomous driving algorithms that can handle edge cases effectively.

Multi-Task Support

V2X-Radar is divided into three subsets to support different research directions:

- V2X-Radar-C: collaborative 3D object detection combining vehicle and roadside data
- V2X-Radar-I: roadside-only perception
- V2X-Radar-V: vehicle-local perception

Collaborative models such as V2X-ViT achieve 53.61% mAP when fusing LiDAR and 4D radar, significantly outperforming single-modality LiDAR models (47.23%). This demonstrates that multi-modal fusion improves perception robustness, particularly in complex or occluded environments.
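To make the idea of combining vehicle and roadside data concrete, here is a minimal late-fusion sketch. It is deliberately much simpler than V2X-ViT, which fuses intermediate features rather than final boxes, and it is not the method used in the V2X-Radar benchmark. The `Detection` record, the 4x4 transform `T`, and the 2 m merge radius are hypothetical choices for illustration.

```python
import math
from dataclasses import dataclass, replace
from typing import List, Sequence


# Hypothetical detection record; field names are illustrative only.
@dataclass
class Detection:
    x: float      # box center in meters
    y: float
    z: float
    yaw: float    # heading in radians
    score: float  # detector confidence
    label: str


def to_vehicle_frame(det: Detection, T: Sequence[Sequence[float]]) -> Detection:
    """Map a roadside detection into the vehicle frame using a 4x4 homogeneous
    transform T (vehicle <- roadside). Roll and pitch are assumed negligible,
    so only the yaw part of the rotation is added to the heading."""
    p = (det.x, det.y, det.z, 1.0)
    x, y, z = [sum(T[r][c] * p[c] for c in range(4)) for r in range(3)]
    yaw_offset = math.atan2(T[1][0], T[0][0])
    return replace(det, x=x, y=y, z=z, yaw=det.yaw + yaw_offset)


def late_fuse(vehicle: List[Detection], roadside: List[Detection],
              T: Sequence[Sequence[float]], merge_radius: float = 2.0) -> List[Detection]:
    """Greedy late fusion: visit boxes by descending score and drop any box whose
    bird's-eye-view center lies within merge_radius of a kept box of the same label."""
    candidates = vehicle + [to_vehicle_frame(d, T) for d in roadside]
    candidates.sort(key=lambda d: d.score, reverse=True)
    fused: List[Detection] = []
    for det in candidates:
        is_duplicate = any(
            kept.label == det.label
            and math.hypot(kept.x - det.x, kept.y - det.y) < merge_radius
            for kept in fused
        )
        if not is_duplicate:
            fused.append(det)
    return fused
```

If the roadside unit sits 10 m ahead of the vehicle (a pure translation in `T`), a car seen by both sensors collapses into one box, while a pedestrian seen only from the roadside is added to the vehicle's view.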

Sensor Synchronization

Accurate alignment of sensors is essential for collaborative perception. V2X-Radar uses GPS/IMU timing and feature-based point cloud registration:

- Time synchronization: GNSS signals and time boxes achieve nanosecond-level alignment between vehicle and roadside units.
- Spatial alignment: LiDAR and cameras are calibrated using checkerboard patterns; LiDAR and 4D radar use corner reflectors, achieving centimeter-level accuracy.

This ensures reliable multi-sensor fusion even in dynamic traffic scenarios, providing a strong foundation for research in V2X perception.
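Once vehicle and roadside clocks share a GNSS time base, frames from the two sides still have to be paired. A common approach is nearest-timestamp matching with a tolerance; the sketch below illustrates that idea under stated assumptions and is not the dataset's documented pipeline. The 50 ms tolerance (half the period of a hypothetical 10 Hz sensor) and the function name are illustrative.

```python
import bisect
from typing import List, Optional, Tuple


def pair_frames(vehicle_ts: List[float], roadside_ts: List[float],
                tolerance: float = 0.05) -> List[Tuple[float, Optional[float]]]:
    """Pair each vehicle frame with the nearest roadside frame in time.

    Timestamps are seconds on a shared GNSS-disciplined clock, and roadside_ts
    must be sorted ascending. A vehicle frame with no roadside frame within
    `tolerance` seconds is paired with None.
    """
    pairs: List[Tuple[float, Optional[float]]] = []
    for t in vehicle_ts:
        i = bisect.bisect_left(roadside_ts, t)
        # The nearest roadside frame is either just before or just after t.
        neighbours = [roadside_ts[j] for j in (i - 1, i) if 0 <= j < len(roadside_ts)]
        best = min(neighbours, key=lambda r: abs(r - t), default=None)
        if best is not None and abs(best - t) <= tolerance:
            pairs.append((t, best))
        else:
            pairs.append((t, None))
    return pairs
```

For example, vehicle frames at 0.00, 0.10, and 0.20 s paired against roadside frames at 0.01, 0.12, and 0.35 s yield matches for the first two and None for the third, since the closest roadside frame is 80 ms away.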

Technical Details

Data Scale and Annotation

The dataset contains the following multi-modal frames, 80,000 in total:

- 20,000 LiDAR frames
- 40,000 camera frames
- 20,000 4D radar frames

It includes 350,000 annotated 3D bounding boxes covering cars, trucks, buses, cyclists, and pedestrians. Annotation is performed using semi-automated tools, with minimal manual correction, ensuring high-quality ground truth for algorithm development.
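3D bounding boxes in LiDAR-based driving datasets are often stored as seven parameters: center (x, y, z), size (length, width, height), and yaw. Assuming V2X-Radar follows a similar convention (its exact label format is not described here), the sketch below shows how such a box expands to its eight corners, which is the first step in computing overlaps or drawing boxes. It also assumes z is the box center and yaw rotates about the vertical axis.

```python
import math
from typing import List, Tuple


def box_corners(x: float, y: float, z: float,
                length: float, width: float, height: float,
                yaw: float) -> List[Tuple[float, float, float]]:
    """Return the 8 corners of a 7-parameter 3D box.

    Assumed convention: (x, y, z) is the box center, length runs along the
    heading direction, and yaw is a rotation about the z (up) axis in radians.
    """
    c, s = math.cos(yaw), math.sin(yaw)
    corners = []
    for dx in (length / 2, -length / 2):
        for dy in (width / 2, -width / 2):
            for dz in (height / 2, -height / 2):
                # Rotate the local offset about z, then translate to the center.
                corners.append((x + c * dx - s * dy, y + s * dx + c * dy, z + dz))
    return corners
```

A 4 m × 2 m × 1.5 m car centered at (10, 5, 1) with zero yaw spans x from 8 to 12 m; rotating it by 90° makes its length run along y instead.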

Algorithm Validation

V2X-Radar exposes two main technical challenges:

- Communication delay: A fixed 100 ms delay reduces mAP by 13.3%–20.49% for methods such as F-Cooper, highlighting the importance of low-latency networks.
- Multi-modal fusion: Combining LiDAR and 4D radar improves mAP to 53.61% in adverse weather, showing the benefits of cross-modal complementarity.

Spatio-temporal compensation algorithms further reduce temporal errors, enhancing the robustness of collaborative perception models.
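The cost of delay is easy to see with arithmetic: a car moving at 15 m/s travels 15 × 0.1 = 1.5 m during a 100 ms delay, so a shared box arrives visibly stale. The simplest form of temporal compensation extrapolates each delayed object forward with a constant-velocity model. The sketch below shows only that baseline; it is not the compensation algorithm evaluated on V2X-Radar, and the `SharedObject` record is hypothetical. Note that 4D radar Doppler measures only the radial velocity component, so a full velocity vector would typically come from a tracker.

```python
from dataclasses import dataclass


# Hypothetical record for an object shared over V2X, already in the receiver's frame.
@dataclass
class SharedObject:
    x: float   # position in meters
    y: float
    vx: float  # estimated velocity in m/s (e.g. from a tracker)
    vy: float


def compensate_latency(obj: SharedObject, delay_s: float) -> SharedObject:
    """Predict where a delayed detection is now, assuming the object kept a
    constant velocity over the delay interval."""
    return SharedObject(
        x=obj.x + obj.vx * delay_s,
        y=obj.y + obj.vy * delay_s,
        vx=obj.vx,
        vy=obj.vy,
    )
```

With `compensate_latency(SharedObject(20.0, 0.0, 15.0, 0.0), 0.1)`, the car's position moves from x = 20 m to about x = 21.5 m. Constant velocity is a reasonable first-order model over 100 ms but degrades for turning or braking vehicles.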

Applications and Future Directions

V2X-Radar provides value in several areas:

- Algorithm testing: supports research in LiDAR and 4D radar fusion and collaborative detection
- Industry standards: provides a reference that could inform guidelines for multi-modal synchronization and annotation
- Commercial potential: 4D radar penetration reduces deployment costs in smart cities, ports, and logistics applications

Future plans include integrating multi-object tracking, trajectory prediction, and real-world C-V2X network tests, accelerating the transition from laboratory research to real-world deployment.

FAQ

Q1: What is 4D radar?

A1: 4D radar measures range, azimuth, elevation, and radial (Doppler) velocity for each detected point in real time, enabling detection of moving objects in complex environments (MDPI reference).
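Each 4D radar point is reported in spherical terms (range, azimuth, elevation) plus a Doppler velocity. The sketch below converts such a point to Cartesian coordinates and shows why Doppler captures only motion along the line of sight. The axis convention (x forward, y left, z up) and the sign convention (positive means moving away) are assumptions; real sensors document their own conventions, and many report approaching targets as negative.

```python
import math
from typing import Sequence, Tuple


def radar_to_cartesian(range_m: float, azimuth_rad: float,
                       elevation_rad: float) -> Tuple[float, float, float]:
    """Convert a radar point from spherical to Cartesian coordinates.

    Assumed convention: x forward, y left, z up; azimuth is measured from x
    toward y, elevation is measured up from the x-y plane.
    """
    horizontal = range_m * math.cos(elevation_rad)
    return (horizontal * math.cos(azimuth_rad),
            horizontal * math.sin(azimuth_rad),
            range_m * math.sin(elevation_rad))


def radial_velocity(point: Sequence[float], velocity: Sequence[float]) -> float:
    """Project an object's velocity (relative to the radar) onto the line of
    sight. Positive values mean the object is moving away from the sensor."""
    norm = math.sqrt(sum(c * c for c in point))
    return sum(p * v for p, v in zip(point, velocity)) / norm
```

An object straight ahead moving sideways has zero radial velocity, which is one reason radar velocity is often combined with tracking or other sensors to recover full motion.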

Q2: What tasks can V2X-Radar support?

A2: Collaborative detection, roadside-only perception, vehicle-local perception, and multi-modal fusion. Multi-object tracking is among the planned extensions.

Q3: How can researchers access the dataset?

A3: Requests are made via Tsinghua University (V2X-Radar official page).

Q4: Why is multi-modal fusion important?

A4: Combining LiDAR, 4D radar, and cameras reduces blind spots, improves performance in poor weather, and captures occluded objects.

Conclusion

The V2X-Radar dataset is a milestone in autonomous driving perception. Combining 4D radar with LiDAR and cameras addresses long-tail problems that single-vehicle systems cannot solve. As autonomous vehicles advance toward L4/L5 automation, V2X-Radar may reshape the perception technology landscape and unlock new possibilities for intelligent transportation.

For more on 4D millimeter-wave radar in V2X perception, see Linpowave 4D Radar Products.